• GenAI

- Text: Chat, Q&A, Compose, Summarize, Think, Search, Insights, Research

- Image: Gen, Identify, Search (Image-Image, Text-Image, etc), Label, Multimodal

- Code gen

- Research: Projects, Science, Breakthroughs

- MoE

• Agentic

- Workflows: GenAI, DNN, Scripts, Tools, etc combined to fulfil Objectives

-- Auto-Generated Plans & Objectives

- Standardization: MCP (API), Interoperability, Protocols

- RAG

- Tools: Websearch, DB, Invoke API/ Tools/ LLM, etc

• Context

- Fresh/ Updated

- Length: Cost vs Speed trade-off

- RAG

- VectorDB (Similarity/ Relevance)

- Memory enhanced

• Fine Tune

- Foundation models (generalists) -> Specialists

- LoRA

- Inference time scaling (compute, tuning, etc)

- Prompts

• Multimodal: Text, Audio, Video, Image, Graph, Sensors

• Safety/ Security

- Output Quality: Relevance, Accuracy, Correctness, Evaluation (Automated Rating, Ranking, JudgeLLM, etc)

-- Hallucination

- Privacy, Data Leak, Backdoor, Jailbreak

- Guard Rails

Insights on Java, Big Data, Search, Cloud, Algorithms, Data Science, Machine Learning...

Wednesday, October 8, 2025

AI/ML '25

Thursday, April 17, 2025

On Quantization

- Speed vs Accuracy trade off.

- Reduce costs on storage, compute, operations.

- Speed up output generation, inference, etc.

- Work with lower precision data.

- Cast/ map data from Int32, Float32, etc 32-bit or higher precision to lower precision data types such as 16-bit Brain Float (BFloat16), 4-bit NormalFloat (NF4), int4 or int8, etc.

- Easy mapping Float32 (1-bit Sign, 8-bit Exponent, 23-bit Mantissa) => BFloat16 (1-bit Sign, 8-bit Exponent, 7-bit Mantissa). Just discard the lower 16 bits of the mantissa. The exponent range stays the same, so no overflow!

- Straightforward mapping works out max, min, data distribution, mean, variance, etc & then sub-divides the range into equally sized buckets based on the bit size of the lower precision data type. E.g. int4 (4-bit) => 2^4 = 16 buckets.

- Handle outliers, data skew which can mess up the mapping, yet lead to loss of useful info if discarded randomly.

- Work out Bounds wrt Loss of Accuracy.
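The two mappings above can be sketched in a few lines of plain Python. This is only an illustration under simple assumptions: the BFloat16 cast truncates (no rounding), and the bucket mapping uses plain min/max with no outlier handling. The function names are made up for this sketch and are not from any library.

```python
import struct

def float32_to_bfloat16_bits(x: float) -> int:
    """Keep the top 16 bits (sign, 8-bit exponent, 7-bit mantissa) of a Float32."""
    bits32 = struct.unpack(">I", struct.pack(">f", x))[0]
    return bits32 >> 16  # drops the lower 16 mantissa bits (truncation)

def bfloat16_bits_to_float(bits16: int) -> float:
    """Pad the dropped mantissa bits with zeros to read the value back."""
    return struct.unpack(">f", struct.pack(">I", bits16 << 16))[0]

def quantize_uniform(values, bits=4):
    """Map floats onto 2^bits equally spaced levels between min and max."""
    lo, hi = min(values), max(values)
    levels = 2 ** bits
    scale = (hi - lo) / (levels - 1) if hi > lo else 1.0
    codes = [round((v - lo) / scale) for v in values]
    return codes, lo, scale

def dequantize_uniform(codes, lo, scale):
    """Approximate reconstruction; the error per value is at most scale / 2."""
    return [lo + c * scale for c in codes]
```

With int4 the worst-case reconstruction error is half a bucket width, which is one simple way to put a bound on the loss of accuracy. A single large outlier stretches max - min and so widens every bucket.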

LLMs, AI/ ML side:

- https://newsletter.theaiedge.io/p/reduce-ai-model-operational-costs

Lucene, Search side:

- https://www.elastic.co/search-labs/blog/scalar-quantization-101

- https://www.elastic.co/search-labs/blog/scalar-quantization-in-lucene

Tuesday, April 8, 2025

Revisiting the Bitter Lesson

Richard Sutton's - The Bitter Lesson(s) continue to hold true. Scaling/ data walls could pose challenges to scaling AI general purpose methods (like searching and learning) beyond a point. And that's where human innovation & ingenuity would be needed. But hang on, wouldn't that violate the "..by our methods, not by us.." lesson?

Perhaps then something akin to human innovation/ discovery/ ingenuity/ creativity might be the next frontier of meta-methods. Machines in their typical massively parallel & distributed, brute-force, systematic trial & error fashion would auto ideate/ innovate/ discover solutions quicker, cheaper, better. Over & over again.

So machine discoveries shall abound, just not of the Archimedes's Eureka kind, but in Edison's 100-different-ways style!

Sunday, April 6, 2025

Model Context Protocol (MCP)

Standardization Protocol for AI agents. Enables them to act, inter-connect, process, parse, invoke functions. In other words to Crawl, Browse, Search, click, etc.

MCP re-uses the well-known client-server architecture, with messages exchanged using JSON-RPC.

Apps use MCP Clients -> MCP Servers (abstracts the service)

Kind of API++ for an AI world!
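To get a feel for the JSON-RPC side, here is a toy sketch of a client building requests and an in-process "server" answering them. The method names tools/list and tools/call follow the MCP spec as I understand it, but the result payloads are heavily simplified, and the helper names (make_request, handle_request) and the sample "add" tool are hypothetical. A real client would use an MCP SDK over stdio or HTTP.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC request ids

def make_request(method, params=None):
    """Build a JSON-RPC 2.0 request string (what an MCP client would send)."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return json.dumps(msg)

def handle_request(raw, tools):
    """Toy stand-in for an MCP server: dispatch to plain Python callables."""
    req = json.loads(raw)
    if req["method"] == "tools/list":
        result = {"tools": [{"name": name} for name in sorted(tools)]}
    elif req["method"] == "tools/call":
        fn = tools[req["params"]["name"]]
        value = fn(**req["params"].get("arguments", {}))
        result = {"content": [{"type": "text", "text": str(value)}]}
    else:
        error = {"code": -32601, "message": "Method not found"}
        return json.dumps({"jsonrpc": "2.0", "id": req["id"], "error": error})
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})
```

Usage would look like handle_request(make_request("tools/call", {"name": "add", "arguments": {"a": 2, "b": 3}}), {"add": lambda a, b: a + b}), with the app never needing to know how the tool is implemented behind the server.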

Saturday, April 5, 2025

Open Weight AI

Inspired by Open Source Software (OSS), yet not fully open...

With Open Weight (OW) typically the final model weights (& the fully trained model) are made available under a liberal licence (free to reuse, modify, distribute, non-discriminating, etc). This helps anyone wanting to start with the fully trained Open Weight model & apply it, fine-tune it (LoRA, etc) or augment it (RAG, etc) for custom use-cases. To that extent, OW has a share & reuse philosophy.

On the other hand, wrt training data, data sources, detailed architecture, optimizations details, and so on OW diverges from OSS by not making it compulsory to share any of these. So these remain closed source with the original devs, with a bunch of pros & cons. Copyright material, IP protection, commercial gains, etc are some stated advantages for the original devs/ org. But lack of visibility to the wider community, white box evaluation of model internals, biases, checks & balances are among the downsides of not allowing a full peek into the model.

Anyway, that's the present, a time of great flux. As models stabilize over time OW may tend towards OSS...

References

- https://openweight.org/

- https://www.oracle.com/artificial-intelligence/ai-open-weights-models/

- https://medium.com/@aruna.kolluru/exploring-the-world-of-open-source-and-open-weights-ai-aa09707b69fc

- https://www.forbes.com/sites/adrianbridgwater/2025/01/22/open-weight-definition-adds-balance-to-open-source-ai-integrity/

- https://promptengineering.org/llm-open-source-vs-open-weights-vs-restricted-weights/

- https://promptmetheus.com/resources/llm-knowledge-base/open-weights-model

- https://www.agora.software/en/llm-open-source-open-weight-or-proprietary/

Wednesday, April 2, 2025

The Big Book of LLM

A book by Damien Benveniste of AIEdge. Though a work in progress, chapters 2 - 4 available for preview are fantastic.

Look forward to a paperback edition, which I certainly hope to own...

Tuesday, April 1, 2025

Mozilla.ai

Mozilla pedigree, AI focus, Open-source, Dev oriented.

Blueprint Hub: Mozilla.ai's Hub of open-source, templatized, customizable AI solutions for developers.

Lumigator: Platform for model evaluation and selection. Consists of a Python FastAPI backend for AI lifecycle management & capturing workflow data useful for evaluation.

Friday, March 28, 2025

Streamlit

Streamlit is a web wrapper for Data Science projects in pure Python. It's a lightweight, simple, rapid prototyping web app framework for sharing scripts.

- https://streamlit.io/playground

- https://www.restack.io/docs/streamlit-knowledge-streamlit-vs-flask-vs-django

- https://docs.streamlit.io/develop/concepts/architecture/architecture

- https://docs.snowflake.com/en/developer-guide/streamlit/about-streamlit

Saturday, March 15, 2025

Scaling Laws

Quick notes around Chinchilla Scaling Law/ Limits & beyond for DeepLearning and LLMs.

Factors

- Model size (N)

- Dataset size (D)

- Training Cost (aka Compute) (C)

- Test Cross-entropy loss (L)

The intuitive way,

- Larger data will need a larger model, and have higher training cost. In other words, N, D, C all increase together, not necessarily linearly, could be exponential, log-linear, etc.

- Likewise Loss is likely to decrease for larger datasets. So an inverse relationship between L & D (& the rest).

- Tying them into equations would be some constants (scaling, exponential, alpha, beta, etc), unknown for now (identified later).

Beyond common sense, the theoretical foundations linking the factors aren't available right now. Perhaps the nature of the problem is that it's hard (NP).

The next best thing then, is to somehow work out the relationships/ bounds empirically. To work with existing Deep Learning models, LLMs, etc using large data sets spanning TB/ PB of data, Trillions of parameters, etc using large compute budget cumulatively spanning years.

Papers by Hestness & Narang, Kaplan, Chinchilla are all attempts along the empirical route. So are more recent papers like Mosaic, DeepSeek, MoE, Llama 3, Microsoft among many others.

Key take away being,

- The scale & bounds are getting larger over time.

- Models from a couple of years back, are found to be grossly under-trained in terms of volumes of training data used. They should have been trained on an order of magnitude larger training data for an optimal training, without risk of overfitting.

- Conversely, the previously used data volumes are suited to much smaller models (SLMs), with inference capabilities similar to those older LLMs.
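As a rough sketch of how the factors tie together, the snippet below uses the parametric form L(N, D) = E + A/N^alpha + B/D^beta with the constants as I recall them from the Chinchilla paper's fit, the common approximation C ~ 6*N*D FLOPs, and the popular ~20 tokens per parameter rule of thumb. Treat all the numbers as assumptions for illustration, not as settled values.

```python
import math

# Fitted constants as reported for the Chinchilla parametric loss (assumed here)
E, A, B, ALPHA, BETA = 1.69, 406.4, 410.7, 0.34, 0.28

def chinchilla_loss(n_params, n_tokens):
    """Estimated test loss L for model size N and dataset size D (in tokens)."""
    return E + A / n_params ** ALPHA + B / n_tokens ** BETA

def training_flops(n_params, n_tokens):
    """Common approximation of training compute: C ~ 6 * N * D."""
    return 6.0 * n_params * n_tokens

def compute_optimal(flops_budget, tokens_per_param=20.0):
    """Split a compute budget into (N, D) assuming D = tokens_per_param * N."""
    n_params = math.sqrt(flops_budget / (6.0 * tokens_per_param))
    return n_params, tokens_per_param * n_params
```

For example, compute_optimal(training_flops(70e9, 1.4e12)) gives back roughly 70B parameters and 1.4T tokens, whereas a 175B model trained on only 300B tokens sits far below the 20 tokens per parameter mark, which is the "under-trained" point made above.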

References

- https://en.wikipedia.org/wiki/Neural_scaling_law

- https://lifearchitect.ai/chinchilla/

- https://medium.com/@raniahossam/chinchilla-scaling-laws-for-large-language-models-llms-40c434e4e1c1

- https://bigscience.huggingface.co/blog/what-language-model-to-train-if-you-have-two-million-gpu-hours

- https://medium.com/nlplanet/two-minutes-nlp-scaling-laws-for-neural-language-models-add6061aece7

- https://lifearchitect.ai/the-sky-is-bigger/

Saturday, December 28, 2024

Debugging Spark Scala/ Java components

In continuation to the earlier post regarding debugging Pyspark, here we show how to debug the Spark Scala/ Java side components. Spark is a distributed processing environment and has Scala APIs for connecting from different languages like Python & Java. The high level Pyspark Architecture is shown here.

For debugging the Spark Scala/ Java components which run within the JVM, it's easy to make use of the Java tooling options (JDWP agent) for remote debugging from any compatible IDE such as IntelliJ IDEA (Eclipse no longer supports Scala). A few points to remember:

- Multiple JVMs in Spark: Spark is a distributed application and involves several components like the Master/ Driver, Slave/ Worker, Executor. In a real world truly distributed setting, each of the components runs in its own separate JVM on separated physical machines. So be clear about the component that you are wanting to debug & set up the Tooling options accordingly targeting the specific JVM instance.

- Two-way connectivity between IDE & JVM: At the same time there should be two-way network connectivity between the IDE (debugger) & the running JVM instance.

- Debugging Locally: Debugging is mostly a dev stage activity & done locally. So it may be better to debug on a Spark cluster running locally. This could be either on a Spark Standalone cluster or a Spark instance run locally (master=local[n]/ local[*]).

Steps:

Environment: Ubuntu-20.04 having Java-8, Spark/Pyspark (ver 2.1.0), Python3.5, IntelliJ IDEA (ver 2024.3), Maven3.6

(I) Idea Remote JVM Debugger

In Idea > Run/ Debug Config > Edit > Remote JVM Debug.

- Start Debugger in Listen to Remote JVM Mode

- Enable Auto Restart

(II)(a) Debug Spark Standalone cluster

Key features of the Spark Standalone cluster are:

- Separate JVMs for Master, Slave/ Worker, Executor

- All of them can be run from a single dev box, provided enough resources (Mem, CPU) are available

- Scripts inside SPARK_HOME/sbin folder like start-master.sh, start-slave.sh (start-worker.sh), etc are used to start these Spark services

In order to Debug lets say an Executor, a Spark Standalone cluster could be started off with 1 Master, 1 Worker, 1 Executor.

# Start Master (Check http://localhost:8080/ to get Master URL/ PORT)

./sbin/start-master.sh

# Start Slave/ Worker

./sbin/start-slave.sh spark://MASTER_URL:<MASTER_PORT>

# Add the JVM tooling (JDWP agent) options as spark.executor.extraJavaOptions in spark-defaults.conf

spark.executor.extraJavaOptions -agentlib:jdwp=transport=dt_socket,server=n,address=localhost:5005,suspend=n

# The value could instead be passed as a conf to SparkContext in Python script:

from pyspark import SparkContext

from pyspark.conf import SparkConf

# suspend=y makes the Executor JVM wait until the debugger is connected

confVals = SparkConf()

confVals.set("spark.executor.extraJavaOptions","-agentlib:jdwp=transport=dt_socket,server=n,address=localhost:5005,suspend=y")

sc = SparkContext(master="spark://localhost:7077",appName="PythonStreamingStatefulNetworkWordCount1",conf=confVals)

(II)(b) Debug locally with master="local[n]"

- In this case a local Spark cluster is spun up via scripts like spark-shell, spark-submit, etc. located inside the bin/ folder

- The different components Master, Slave/ Worker, Executor all run within one JVM as threads, where the value n is the no of threads, (set n=2)

- Export JAVA_TOOL_OPTIONS beforehand in the terminal from which the Pyspark script will be run

export JAVA_TOOL_OPTIONS="-agentlib:jdwp=transport=dt_socket,server=n,suspend=n,address=5005"

(III) Execute PySpark Python script

python3.5 ${SPARK_HOME}/examples/src/main/python/streaming/network_wordcount.py localhost 9999

This should start off Pyspark & connect the Executor JVM to the waiting Idea Remote debugger instance for debugging.
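Since the same agent string shows up in spark-defaults.conf, SparkConf and JAVA_TOOL_OPTIONS with small variations, a tiny helper can keep them consistent. This is just a convenience sketch. The function names are made up here, and it sticks to str.format so that it also runs on the older Python 3.5 used in this set-up.

```python
def jdwp_agent_option(host="localhost", port=5005, suspend=False, server=False):
    """Build the -agentlib:jdwp option string used in the steps above.

    server=False means the JVM connects out to an IDE debugger that is
    already listening on host:port (the 'Listen to Remote JVM' mode).
    """
    def flag(value):
        return "y" if value else "n"
    return "-agentlib:jdwp=transport=dt_socket,server={},address={}:{},suspend={}".format(
        flag(server), host, port, flag(suspend))

def spark_defaults_line(option, key="spark.executor.extraJavaOptions"):
    """Format the option as a line for spark-defaults.conf."""
    return "{} {}".format(key, option)
```

Passing suspend=True is handy when the breakpoint sits in start-up code, since the JVM then waits for the debugger before running anything.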

Thursday, December 26, 2024

Debugging Pyspark in Eclipse with PyDev

An earlier post shows how to run Pyspark (Spark 2.1.0) in Eclipse (ver 2024-06 (4.32)) using the PyDev (ver 12.1) plugin. The OS is Ubuntu-20.04 with Java-8, & an older version of Python3.5 compatible with PySpark (2.1.0).

While the Pyspark code runs fine within Eclipse, when trying to Debug an error is thrown:

"Pydev: Unexpected error setting up the debugger: Socket closed".

This is due to a higher Python requirement (>3.6) for the pydevd debugger module within PyDev. Details from the PyDev installations page clearly state that Python3.5 is compatible only with PyDev 9.3.0. So it's back to square one!

Install/ replace Pydev 12.1 with PyDev 9.3 in Eclipse

- Uninstall Pydev 12.1 (Help > About > Installation details > Installed software > Uninstall PyDev plugin)

- Also manually remove all Pydev folders from eclipse/plugins folder (com.python.pydev.* & org.python.pydev.*)

- Download & Install PyDev 9.3 from Download location as per instructions

- Unzip to eclipse/dropins folder

- Restart eclipse & check (Help > About > Installation details > Installed software)

Test debugging Pyspark

Refer to the steps to Run Pyspark on PyDev in Eclipse, & ensure the PyDev Interpreter is python3.5, PYSPARK_PYTHON variable and PYTHONPATH are correctly setup.

Finally, right click on network_wordcount.py > Debug as > Python run

(Set up Debug Configurations > Arguments & provide program arguments, e.g. "localhost 9999", & any breakpoints in the python code to test).

Wednesday, December 25, 2024

Pyspark in Eclipse with PyDev

This post captures the steps to get Spark (ver 2.1) working within Eclipse (ver 2024-06 (4.32)) using the PyDev (ver 12.1) plugin. The OS is Ubuntu-20.04 with Java-8, Python 3.x & Maven 3.6.

(I) Compile Spark code

The Spark code is downloaded & compiled from a location "SPARK_HOME".

export SPARK_HOME="/SPARK/DOWNLOAD/LOCATION"

cd ${SPARK_HOME}

mvn install -DskipTests=true -Dcheckstyle.skip -o

(Issue: For a "Failed to execute goal org.scalastyle:scalastyle-maven-plugin:0.8.0:check" error:

Copy scalastyle-config.xml to the sub-project (next to pom.xml) having the error.)

(II) Compile Pyspark

(a) Install Pyspark dependencies

- Install Pandoc

sudo apt-get install pandoc

- Install a compatible older Pypandoc (ver 1.5)

pip3 install pypandoc==1.5

- Install a compatible older Python 3.x (ver 3.5)

sudo add-apt-repository ppa:deadsnakes/ppa

sudo apt-get install python3.5

(b) Build Pyspark

cd ${SPARK_HOME}/python

export PYSPARK_PYTHON=python3.5

# Build - creates ${SPARK_HOME}/python/build

python3.5 setup.py build

# Dist - creates ${SPARK_HOME}/python/dist

python3.5 setup.py sdist

(c) Export PYTHONPATH

export PYTHONPATH=$PYTHONPATH:${SPARK_HOME}/python/:${SPARK_HOME}/python/lib/py4j-0.10.4-src.zip:${SPARK_HOME}/python/pyspark/shell.py;

(III) Run Pyspark from console

Pyspark setup is done & standalone examples code should run. Ensure variables ${SPARK_HOME}, ${PYSPARK_PYTHON} & ${PYTHONPATH} are all correctly exported (steps (I), (II)(b) & (II)(c) above):

python3.5 ${SPARK_HOME}/python/build/lib/pyspark/examples/src/main/python/streaming/network_wordcount.py localhost 9999

(IV) Run Pyspark on PyDev in Eclipse

(a) Eclipse with PyDev plugin installed:

Set-up tested on Eclipse (ver 2024-06 (4.32.0)) and PyDev plugin (ver 12.1x).

(b) Import the spark project in Eclipse

There would be compilation errors due to missing Spark Scala classes.

(c) Add Target jars for Spark Scala classes

Eclipse no longer has support for Scala so the corresponding Spark Scala classes are missing. A work around is to add the Scala target jars compiled using mvn (in step (I) above) manually to:

spark-example > Properties > Java Build Path > Libraries

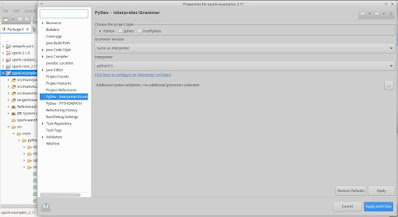

(d) Add PyDev Interpreter for Python3.5

Go to: spark-example > Properties > PyDev - Interpreter/ Grammar > Click to configure an Interpreter not listed > Open Interpreter Preferences Page > New > Choose from List:

& Select /usr/bin/python3.5

On the same page, under the Environment tab add a variable named "PYSPARK_PYTHON" having value "python3.5"

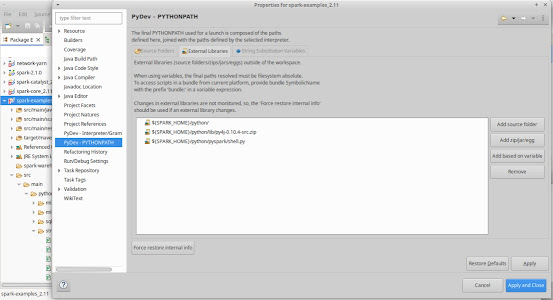

(e) Set up PYTHONPATH for PyDev

spark-example > Properties > PyDev - PYTHONPATH

- Under String Substitution Variables add a variable with name "SPARK_HOME" & value "/SPARK/DOWNLOAD/LOCATION" (same location added in Step (I)).

- Under External Libraries, Choose Add based on variable, add 3 entries:

${SPARK_HOME}/python/

${SPARK_HOME}/python/lib/py4j-0.10.4-src.zip

${SPARK_HOME}/python/pyspark/shell.py

With that Pyspark should be properly set-up within PyDev.

(f) Run Pyspark from Eclipse

Right click on network_wordcount.py > Run as > Python run

(You can further change Run Configurations > Arguments & provide program arguments, e.g. "localhost 9999")

Monday, November 25, 2024

Getting Localstack Up and Running

In continuation to the earlier post on mocks for clouds, this article does a deep dive into getting up & running with Localstack. This is a consolidation of the steps & best practices shared here, here & here. The Localstack set-up is on Ubuntu-20.04, with Java-8x, Maven-3.8x, Docker-24.0x.

(I) Set-up

# Install awscli

sudo apt-get install awscli

# Install localstack ver 3.8

## Issue1: By default pip pulls in version 4.0, which gives an error:

## ERROR: Could not find a version that satisfies the requirement localstack-ext==4.0.0 (from localstack)

python3 -m pip install localstack==3.8.1

--

# Add to /etc/hosts

127.0.0.1 localhost.localstack.cloud

127.0.0.1 s3.localhost.localstack.cloud

--

# Configure AWS from cli

aws configure

aws configure set default.region us-east-1

aws configure set aws_access_key_id test

aws configure set aws_secret_access_key test

## Manually configure AWS

Add to ~/.aws/config

endpoint_url = http://localhost:4566

## Add mock credentials

Add to ~/.aws/credentials

aws_access_key_id = test

aws_secret_access_key = test

--

# Download docker images needed by the Lambda function

## Issue 2: Do this beforehand, as Localstack gets stuck

## at the download image stage unless it's already available

## Pull java:8.al2

docker pull public.ecr.aws/lambda/java:8.al2

## Pull nodejs (required for other nodejs Lambda functions)

docker pull public.ecr.aws/lambda/nodejs:18

## Check images downloaded

docker image ls

(II) Start Localstack

# Start locally

localstack start

# Start as docker (add '-d' for daemon)

## Issue 3: Local directory's mount should be as per sample docker-compose

docker-compose -f docker-compose-localstack.yaml up

# Localstack is up on URLs

http://localhost:4566

http://localhost.localstack.cloud:4566

# Check Localstack Health

curl http://localhost:4566/_localstack/info

curl http://localhost:4566/_localstack/health
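The same health check can be scripted, e.g. to wait for Localstack before running tests. Below is a minimal stdlib-only sketch. It assumes the health endpoint returns JSON with a "services" map whose values are states such as "available" or "running" (what I see on ver 3.8; other versions may differ), and the function names are hypothetical.

```python
import json
from urllib.request import urlopen

# Same endpoint as the curl call above
HEALTH_URL = "http://localhost:4566/_localstack/health"

def fetch_health(url=HEALTH_URL, timeout=5):
    """Fetch the raw health JSON from a running Localstack instance."""
    with urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")

def ready_services(health_json):
    """Return the sorted names of services reported as usable."""
    services = json.loads(health_json).get("services", {})
    return sorted(name for name, state in services.items()
                  if state in ("available", "running"))
```

Something like "s3" in ready_services(fetch_health()) can then gate the S3 steps that follow.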

(III) AWS services on Localstack from CLI

(a) S3

# Create bucket named "test-buck"

aws --endpoint-url=http://localhost:4566 s3 mb s3://test-buck

# Copy item to bucket

aws --endpoint-url=http://localhost:4566 s3 cp a1.txt s3://test-buck

# List bucket

aws --endpoint-url=http://localhost:4566 s3 ls s3://test-buck

--

(b) Sqs

# Create queue named "test-q"

aws --endpoint-url=http://localhost:4566 sqs create-queue --queue-name test-q

# List queues

aws --endpoint-url=http://localhost:4566 sqs list-queues

# Get queue attribute

aws --endpoint-url=http://localhost:4566 sqs get-queue-attributes --queue-url http://sqs.us-east-1.localhost.localstack.cloud:4566/000000000000/test-q --attribute-names All

--

(c) Lambda

aws --endpoint-url=http://localhost:4566 lambda list-functions

# Create Java function

aws --endpoint-url=http://localhost:4566 lambda create-function --function-name test-j-div --zip-file fileb://original-java-basic-1.0-SNAPSHOT.jar --handler example.HandlerDivide::handleRequest --runtime java8.al2 --role arn:aws:iam::000000000000:role/lambda-test

# List functions

aws --endpoint-url=http://localhost:4566 lambda list-functions

# Invoke Java function

aws --endpoint-url=http://localhost:4566 lambda invoke --function-name test-j-div --payload '[200,9]' outputJ.txt

# Delete function

aws --endpoint-url=http://localhost:4566 lambda delete-function --function-name test-j-div

(IV) AWS services on Localstack from Java-SDK

# For S3 & Sqs - localstack-aws-sdk-examples, java sdk

# For Lambda - localstack-lambda-java-sdk-v1

Monday, August 12, 2024

To Mock a Cloud

Cloud hosting has been the norm for a while now. SaaS, PaaS, IaaS, serverless, AI, whatever the form may be, organizations (org) need to have a digital presence on the cloud.

Cloud vendors offer hundreds of features and services such as 24x7 availability, fail-safe, load-balanced, auto-scaling, disaster resilient distributed, edge-compute, AI/ ML clusters, LLMs, Search, Database, Datawarehouses among many others right off-the-shelf. They additionally provide a pay-as-you-go model only for the services being used. Essentially everything that any org could ask for today & in the future!

But it's not all rosy. The cloud bill (even though pay-as-you-go) does burn a hole in the pocket. While expenses for the live production (prod) environment are necessary, costs for the other internal environments (dev, test, etc) could be largely reduced by replacing the real Cloud with a Mock Cloud. This would additionally speed up dev and deployment times and make bug fixes and devops much quicker & more streamlined.

As devs know, mocks, emulators, etc are only as good as their implementation - how true they are to the real thing. It's a pain to find new/ unknown bugs on prod only because it's an env very different from dev/ test. Which dev worth their salt (or weight in gold!) hasn't seen this ever?

While using containers to mock up cloud services was the traditional way of doing it, a couple of recent initiatives like Localstack, Moto, etc seem promising. Though AWS focussed for now, support for others is likely soon. Various AWS services like s3, sns, sqs, ses, lambda, etc are already supported at different levels of maturity. So go explore mocks for cloud & happy coding!

Monday, July 22, 2024

Cloudera - Streaming Data Platform

Cloudera has a significantly mature streaming offering on their Data Platform. Data from varied sources such as rich media, text, chat, message queues, etc is brought in to their unified DataFlow platform using Nifi or other ETL/ ELT. After processing these can be directed to one or more of the Op./ App DB, Data Lake (Iceberg), Vector DB post embedding (for AI/ ML), etc.

Streaming in AI/ ML apps helps to provide a real-time context that can be leveraged by the apps. Things like feedback mechanism, grounding of outputs, avoiding hallucinations, model evolution, etc all require real-time data to be available. So with a better, faster data & MLOps platform Cloudera is looking to improve the quality of the ML apps running on it.

Cloudera has also made it easy to get started with ML with their cloud based Accelerators (AMPs). AMPs have support for not just Cloudera built modules, but even those from others like Pinecone, AWS, Hugging Face, etc & the ML community. Apps for Chats, Text summarization, Image analysis, Time series, LLMs, etc are available for use off the shelf. As always, Cloudera continues to offer all deployment options like on-premise, cloud & hybrid as per customer's needs.

Thursday, May 30, 2024

Mixture of Experts (MoE) Architecture

Enhancement to LLMs to align with expert models paradigm.

- Each expert is implemented as a separate Feed Forward Network (FFN) (though other ML models trainable via Backprop should work).

- The expert FFNs are introduced in parallel to the existing FFN layer after the Attention Layer.

- Decision to route tokens to the expert is by a router.

- Router is implemented as a linear layer followed by a Softmax giving the probability of each expert, to pick the top few.
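The routing step can be sketched in plain Python for a single token. This is a toy under stated assumptions: the router is a bias-free linear layer given as a list of weight rows, the experts are arbitrary callables standing in for FFNs, and the top-k gate values are re-normalised to sum to 1 (one common choice, not the only one). All names are made up for the sketch.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def route_token(token, router_weights, top_k=2):
    """Linear layer + softmax, then keep the top_k experts with renormalised gates."""
    logits = [sum(w * x for w, x in zip(row, token)) for row in router_weights]
    probs = softmax(logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]

def moe_layer(token, router_weights, experts, top_k=2):
    """Weighted sum of the selected experts' outputs for one token."""
    out = [0.0] * len(token)
    for idx, gate in route_token(token, router_weights, top_k):
        expert_out = experts[idx](token)
        out = [o + gate * v for o, v in zip(out, expert_out)]
    return out
```

Only top_k experts run per token, which is where the compute saving over one very wide FFN comes from.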

Saturday, November 11, 2023

Starting off with Databases on Win

Options getting started with DB on Windows:

1) Try MySql (or PostgreSQL) DB Online via Browser:

• https://onecompiler.com/mysql/3zt5uh4dc

• https://www.w3schools.com/mysql/mysql_exercises.asp

• https://www.w3schools.com/mysql/exercise.asp

• https://www.mycompiler.io/online-mysql-editor

• https://www.mycompiler.io/new/mysql

• https://extendsclass.com/postgresql-online.html

2) Install MySql on Windows:

• https://dev.mysql.com/downloads/installer/

3) Run Windows Virtual Machine (VM) with a MS Sql DB installed in that VM:

3.1) Use either VMPlayer or VirtualBox as the virtualization software

• VMWare Player: https://www.vmware.com/in/products/workstation-player.html

• Oracle VirtualBox: https://www.virtualbox.org/wiki/Downloads

3.2) Download the corresponding VM:

• https://developer.microsoft.com/en-us/windows/downloads/virtual-machines/

3.3) Login to the VM & install MS Sql server in it

Hope it helps.

Wednesday, March 31, 2021

Flip side to Technology - Extractivism, Exploitation, Inequality, Disparity, Ecological Damage

Anatomy of an AI system is a real eye-opener. This helps us to get a high level view of the enormous complexity and scale of the supply chains, manufacturers, assemblers, miners, transporters and other links that collaborate at a global scale to help commercialize something like an Amazon ECHO device.

The authors explain how extreme exploitation of human labour, environment and resources that happens at various levels largely remains unacknowledged and unaccounted for. Right from mining of rare elements, to smelting and refining, to shipping and transportation, to component manufacture and assembly, etc. these mostly happen under inhuman conditions with complete disregard for health, well-being, safety of workers who are given miserable wages. These processes also cause irreversible damage to the ecology and environment at large.

Though Amazon Echo as an AI powered self-learning device connected to cloud-based web-services opens up several privacy, safety, intrusion and digital exploitation concerns for the end-user, focusing solely on Echo would amount to missing the forest for the trees! Most issues highlighted here would be equally true of technologies from many other traditional and non-AI, or not-yet-AI, powered sectors like automobiles, electronics, telecom, etc. Time to give a thought to these issues and bring a stop to the irreversible damage to human lives, well-being, finances, equality, and to the environment and planetary resources!

Monday, March 29, 2021

Doing Better Data Science

In the article titled "Field Notes #1 - Easy Does It" author Will Kurt highlights a key aspect of doing good Data Science - Simplicity. This includes first and foremost getting a good understanding of the problem to be solved. Later, among the hypotheses & possible solutions/ models, to favour the simpler ones. At least giving the simpler ones a fair/ equal chance at proving their worth in tests employing standardized performance metrics.

Another article of relevance for Data Scientists is from the allied domain of Stats titled "The 10 most common mistakes with statistics, and how to avoid them". The article based on the paper in eLife by the authors Makin and Orban de Xivry lists out the ten most common statistical mistakes in scientific research. The paper also includes tips for both the Reviewers to detect such mistakes and for Researchers (authors) to avoid them.

Many of the issues listed are linked to p-value computations, which are used to establish the significance of statistical tests & draw conclusions from them. However, their incorrect usage, understanding, corrections, manipulation, etc. render the test ineffective and lead to insignificant results getting reported. Issues of Sampling and adequate Control Groups along with faulty attempts by authors to establish Causation where none exists are also common in scientific literature.

As per the authors, these issues typically happen due to ineffective experimental designs, inappropriate analyses and/or flawed reasoning. A strong publication bias & pressure on researchers to publish significant results as opposed to correct but failed experiments makes matters worse. Moreover senior researchers entrusted to mentor juniors are often unfamiliar with fundamentals and prone to making these errors themselves. Their aversion to taking criticism becomes a further roadblock to improvement.

While correct mentoring of early stage researchers will certainly help, change can also come in by making science open access. Open science/ research must include details on all aspects of the study and all the materials involved such as data and analysis code. On the other hand, at the institutions and funders level incentivizing correctness over productivity can also prove beneficial.

Friday, April 17, 2020

Analysis of Deaths Registered In Delhi Between 2015 - 2018

The detailed reports can be downloaded in the form of pdf files from the website of the Department of Economics and Statistics, Delhi Government. Anonymized, cleaned data is made available in the form of tables in Section Titled "STATISTICAL TABLES" in the pdf files. The births and deaths data is aggregated by attributes like age, profession, gender, etc.

Approach

In this article, an analysis has been done of tables D-4 (DEATHS BY SEX AND MONTH OF OCCURRENCE (URBAN)), D-5 (DEATHS BY TYPE OF ATTENTION AT DEATH (URBAN)), & D-8 (DEATHS BY AGE, OCCUPATION AND SEX (URBAN)) from the above pdfs. Data for the four years 2015-18 (presently downloadable from the department's website) has been used from these tables for evaluating mortality trends in Delhi for the three most populous Urban districts of North DMC, South DMC & East DMC.

Analysis

1) Cyclic Trends: Data for absolute death counts for period Jan-2015 to Dec-2018 is plotted in table "T1: Trends 2015-18". Another view of the same data is as monthly percentage of annual shown in table "T-2: Month/ Year_Total %".

Both tables clearly show that there is a spike in the number of deaths in the colder months of Dec to Feb. About 30% of all deaths in Delhi happen within these three months. The percentages are fairly consistent for both genders and across all 3 districts of North, South & East DMCs.

As summer sets in from March the death percentages start dropping, reaching the lowest points below 7% monthly for June & July as the monsoons set in. Towards the end of monsoons, a second spike is seen around Aug/ Sep followed by a dip in Oct/ Nov before the next winters when the cyclic trends repeat.

Trends reported above are also seen with moving averages, plotted in Table "T-3: 3-Monthly Moving Avg", across the three districts and genders. Similar trends, though not plotted here, are seen in the moving averages of other tenures (such as 2 & 4 months).
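For anyone wanting to redo the plots, the two derived series (monthly share of the annual total & the moving average) are simple to compute. Here is a small sketch in plain Python. The function names are made up for illustration, and the inputs are expected to be the monthly counts copied from table D-4 of the reports.

```python
def monthly_share(counts):
    """Each month's deaths as a percentage of the year's total."""
    total = sum(counts)
    return [100.0 * c / total for c in counts]

def moving_average(series, window=3):
    """Trailing moving average; the first window - 1 points have no value."""
    return [sum(series[i - window + 1:i + 1]) / window
            for i in range(window - 1, len(series))]
```

Calling moving_average with window=2 or window=4 gives the other tenures mentioned above.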

2) Gender Differences: In terms of differences between genders, far more deaths of males as compared to females were noted during the peak winters in Delhi between 2015-18. This is shown in table "T4: Difference Male & Female".

From a peak gap of about 1000 in the colder months it drops to about 550-600 range in the summer months, particularly for the North & South DMCs. A narrower gap is seen in the East DMC, largely attributable to its smaller population size as compared to the other two districts.

Table "T5: Percentage Male/ Female*100" plots the percentage of male deaths to females over the months. The curves of the three districts though quite wavy primarily stay within the rough band of 1.5 to 1.7 times male deaths as compared to females. The spike of the winter months is clearly visible in table T5 as well.

3) Cross District Differences in Attention Type: Table "T6: Percentage Attention Type" plots the different form of Attention Type (hospital, non-institutional, doctor/ nurse, family, etc.) received by the person at the time of death.

While in East DMC, over 60% people were in institutional care, the same is almost 20% points lower for North & South DMCs. For the latter two districts the percentage for No Medical Attention received has remained consistently high, the South DMC being particularly high at over 40%.

4) Vulnerable Age: Finally, a plot of the vulnerable age groups is shown in table "T7: Age 55 & Above". A clear spike in death rates is seen in the 55-64 age group, perhaps attributable to the act of retirement from active profession & subsequent life style changes. The gender skewness within the 55-64 age group may again be due to the inherent skewness in the workforce, having far higher number of male workers, who would be subjected to the effects of retirement. This aspect could be probed further from other data sources.

Age groups in-between 65-69 show far lower mortality rates as they are perhaps better adjusted and healthier. Finally, a spike is seen in the number of deaths in the super senior citizens aged 70 & above, which must be largely attributable to their advancing age resulting in frail health.

Conclusion

The analysis in this article was done using data published by the Directorate of Economics and Statistics & Office of Chief Registrar (Births & Deaths), Government of National Capital Territory (NCT) of Delhi annually on registrations of births and deaths within the NCT of Delhi. Data of mortality from the three most populous districts of North DMC, South DMC and East DMC of Delhi were analysed. Some specific monthly, yearly and age group related trends are reported here.

The analysis can be easily performed over the other districts of Delhi, as well as for data from current years as and when those are made available by the department. The data may also be used for various modeling and simulation purposes and training machine learning algorithms. A more real-time sharing of raw (anonymized, aggregated) data by the department via api's or other data feeds may be looked at in the future. These may prove beneficial for the research and data science community who may put the data to good use for public health and welfare purposes.

Resources:

Downloadable Datasheets For Analysis: