This post captures the steps to get Spark (ver 2.1) working within Eclipse (ver 2024-06, i.e. 4.32) using the PyDev plugin (ver 12.1). The OS is Ubuntu 20.04 with Java 8, Python 3.x & Maven 3.6.

(I) Compile Spark code

The Spark source code is downloaded to, and compiled from, a location referred to as SPARK_HOME:

export SPARK_HOME="/SPARK/DOWNLOAD/LOCATION"

cd ${SPARK_HOME}

mvn install -DskipTests=true -Dcheckstyle.skip -o

Issue: if the build fails with "Failed to execute goal org.scalastyle:scalastyle-maven-plugin:0.8.0:check", copy scalastyle-config.xml from ${SPARK_HOME} into the failing sub-project's directory (next to its pom.xml) and re-run the build.
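The fix above can be wrapped in a small shell function. This is a minimal sketch, assuming scalastyle-config.xml sits at the root of ${SPARK_HOME} (as it does in the Spark source tree) and that you pass in the failing sub-project's directory yourself; the function name copy_scalastyle_config is hypothetical.

```shell
# Hypothetical helper: copy the root scalastyle-config.xml next to the
# pom.xml of a sub-project whose scalastyle check failed.
# Assumes the config file lives at the root of the Spark source tree.
copy_scalastyle_config() {
    local spark_home="$1"   # root of the Spark source tree
    local subproject="$2"   # directory of the failing sub-project
    local config="${spark_home}/scalastyle-config.xml"

    if [ ! -f "$config" ]; then
        echo "No scalastyle-config.xml found in ${spark_home}" >&2
        return 1
    fi
    if [ ! -f "${subproject}/pom.xml" ]; then
        echo "No pom.xml in ${subproject}; is this a Maven sub-project?" >&2
        return 1
    fi
    # Never overwrite a config the sub-project already has
    if [ -f "${subproject}/scalastyle-config.xml" ]; then
        echo "${subproject} already has scalastyle-config.xml; leaving it as-is"
        return 0
    fi
    cp "$config" "${subproject}/"
}
```

Usage: `copy_scalastyle_config "${SPARK_HOME}" "${SPARK_HOME}/<failing-module>"`, where `<failing-module>` is whichever module Maven reported.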

(II) Compile Pyspark

(a) Install Pyspark dependencies

sudo apt-get install pandoc

- Install an older, compatible pypandoc (ver 1.5):

pip3 install pypandoc==1.5

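- Install Python 3.5 from the deadsnakes PPA (Ubuntu 20.04 ships a much newer Python 3 than Spark 2.1 was built for):

sudo add-apt-repository ppa:deadsnakes/ppa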

sudo apt-get install python3.5
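Before building, a quick check that the tools installed above are actually on the PATH can save a failed build later. A minimal sketch; the function name check_prereqs is hypothetical, and the list of commands passed to it is an assumption based on the steps in this post.

```shell
# Hypothetical helper: print every command from the argument list that is
# not on the PATH, and return non-zero if any are missing.
check_prereqs() {
    local missing=0
    for cmd in "$@"; do
        if ! command -v "$cmd" >/dev/null 2>&1; then
            echo "missing: $cmd"
            missing=1
        fi
    done
    return "$missing"
}
```

Usage: `check_prereqs mvn java pandoc pip3 python3.5`. The installed pypandoc version can be confirmed separately with `pip3 show pypandoc`.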

(b) Build Pyspark

cd ${SPARK_HOME}/python

export PYSPARK_PYTHON=python3.5

# Build - creates ${SPARK_HOME}/python/build

python3.5 setup.py build

# Dist - creates ${SPARK_HOME}/python/dist

python3.5 setup.py sdist
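To confirm both setup.py commands left their output behind before moving on, a small check like the one below may help. It is a sketch assuming the layout described in the comments above (build/ and dist/ under ${SPARK_HOME}/python) and that sdist writes a .tar.gz, its default format on Linux; the function name check_pyspark_build is hypothetical.

```shell
# Hypothetical helper: verify that "setup.py build" and "setup.py sdist"
# left their output under <spark_home>/python.
check_pyspark_build() {
    local pydir="$1/python"
    if [ ! -d "${pydir}/build" ]; then
        echo "no build directory: run 'setup.py build' first" >&2
        return 1
    fi
    # sdist writes a gzipped tarball by default on Linux
    if ! ls "${pydir}"/dist/*.tar.gz >/dev/null 2>&1; then
        echo "no source tarball in ${pydir}/dist: run 'setup.py sdist'" >&2
        return 1
    fi
    echo "build and dist look OK"
}
```

Usage: `check_pyspark_build "${SPARK_HOME}"`.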

(c) Export PYTHONPATH

export PYTHONPATH=$PYTHONPATH:${SPARK_HOME}/python/:${SPARK_HOME}/python/lib/py4j-0.10.4-src.zip:${SPARK_HOME}/python/pyspark/shell.py;
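The export above hard-codes the py4j version bundled with Spark 2.1 and leaves an empty leading entry when PYTHONPATH starts out unset. Below is a sketch of a more defensive variant, assuming exactly one py4j-*-src.zip ships under ${SPARK_HOME}/python/lib; the function name spark_pythonpath is hypothetical. It keeps the existing PYTHONPATH first, as the export above does, and leaves out shell.py, since Python only imports from PYTHONPATH entries that are directories or zip archives.

```shell
# Hypothetical helper: print a PYTHONPATH that includes PySpark and the
# py4j source zip shipped with the given Spark tree.
spark_pythonpath() {
    local spark_home="$1"
    local py4j_zip
    # Pick up whatever py4j version is bundled (0.10.4 for Spark 2.1)
    py4j_zip=$(ls "${spark_home}"/python/lib/py4j-*-src.zip 2>/dev/null | head -n 1)
    if [ -z "$py4j_zip" ]; then
        echo "py4j zip not found under ${spark_home}/python/lib" >&2
        return 1
    fi
    local entries="${spark_home}/python:${py4j_zip}"
    # Keep any existing PYTHONPATH first, without producing an empty entry
    if [ -n "${PYTHONPATH:-}" ]; then
        entries="${PYTHONPATH}:${entries}"
    fi
    echo "$entries"
}
```

Usage: `export PYTHONPATH="$(spark_pythonpath "${SPARK_HOME}")"`.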

(III) Run Pyspark from console

The Pyspark setup is now done, and the standalone example code should run. Ensure the variables ${SPARK_HOME}, ${PYSPARK_PYTHON} & ${PYTHONPATH} are all correctly exported (steps (I), (II)(b) & (II)(c) above):

python3.5 ${SPARK_HOME}/python/build/lib/pyspark/examples/src/main/python/streaming/network_wordcount.py localhost 9999
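network_wordcount.py reads lines from a TCP socket, so something must already be listening on localhost:9999 when it starts; the example's own docstring suggests running a Netcat server (`nc -lk 9999`) and typing words into it. The function below is a sketch that checks the three variables this post relies on before launching; its name check_pyspark_env is hypothetical, and treating "${SPARK_HOME}/python" on PYTHONPATH as the signal of a correct setup is an assumption.

```shell
# Hypothetical helper: sanity-check the three variables this post relies on.
check_pyspark_env() {
    local ok=0
    if [ -z "${SPARK_HOME:-}" ] || [ ! -d "$SPARK_HOME" ]; then
        echo "SPARK_HOME is unset or not a directory" >&2
        ok=1
    fi
    if [ -z "${PYSPARK_PYTHON:-}" ] || ! command -v "$PYSPARK_PYTHON" >/dev/null 2>&1; then
        echo "PYSPARK_PYTHON is unset or not on PATH" >&2
        ok=1
    fi
    # Accept ${SPARK_HOME}/python with or without a trailing slash
    case ":${PYTHONPATH:-}:" in
        *":${SPARK_HOME:-}/python:"*|*":${SPARK_HOME:-}/python/:"*) ;;
        *) echo "PYTHONPATH does not contain \${SPARK_HOME}/python" >&2; ok=1 ;;
    esac
    return "$ok"
}
```

Usage: start the listener in one terminal with `nc -lk 9999`, then in a second terminal run `check_pyspark_env && python3.5 ${SPARK_HOME}/python/build/lib/pyspark/examples/src/main/python/streaming/network_wordcount.py localhost 9999` and type a few words into the Netcat terminal.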

(IV) Run Pyspark on PyDev in Eclipse

(a) Eclipse with PyDev plugin installed:

The setup was tested on Eclipse (ver 2024-06, i.e. 4.32.0) with the PyDev plugin (ver 12.1.x).

(b) Import the Spark project into Eclipse

Expect compilation errors at this point, caused by the missing Spark Scala classes.

(c) Add Target jars for Spark Scala classes

Eclipse no longer supports Scala, so the Spark Scala classes cannot be resolved. As a workaround, manually add the Scala target jars built with mvn (in step (I) above) under:

spark-example > Properties > Java Build Path > Libraries

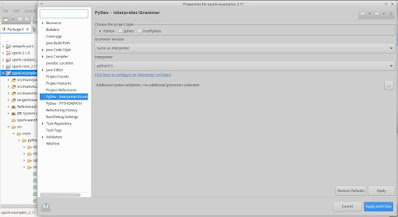
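To find the jars to add, a listing of the Maven output directories may help. This is a sketch assuming the build in step (I) left each module's jar directly in that module's target/ directory (Maven's default) and that the *-tests.jar and *-sources.jar variants are not needed; the function name list_spark_target_jars is hypothetical, and -regex relies on GNU find.

```shell
# Hypothetical helper: list the module jars Maven built under target/
# directories, skipping test and source jars.
list_spark_target_jars() {
    local spark_home="$1"
    # -regex matches the whole path: only jars sitting directly in a target/ dir,
    # so dependency jars nested deeper (e.g. target/scala-2.11/jars/) are skipped
    find "$spark_home" -type f -regex '.*/target/[^/]*\.jar' \
        ! -name '*-tests.jar' ! -name '*-sources.jar' | sort
}
```

Usage: `list_spark_target_jars "${SPARK_HOME}"`, then add the listed jars via Add External JARs... on the Libraries tab.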

(d) Add PyDev Interpreter for Python3.5

Go to: spark-example > Properties > PyDev - Interpreter/Grammar > Click to configure an Interpreter not listed > Open Interpreter Preferences Page > New > Choose from List, and select /usr/bin/python3.5.

On the same page, under the Environment tab, add a variable named "PYSPARK_PYTHON" with the value "python3.5".

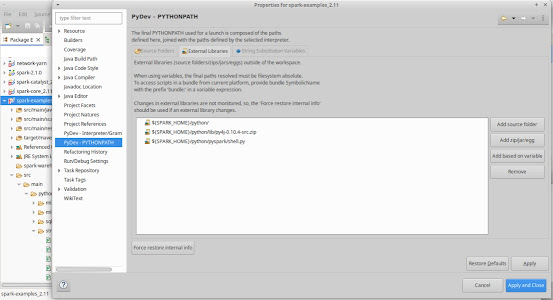

(e) Set up PYTHONPATH for PyDev

spark-example > Properties > PyDev - PYTHONPATH

- Under String Substitution Variables, add a variable with name "SPARK_HOME" & value "/SPARK/DOWNLOAD/LOCATION" (the same location exported in step (I)).

- Under External Libraries, choose Add based on variable and add 3 entries (the same paths exported in step (II)(c)):

${SPARK_HOME}/python/

${SPARK_HOME}/python/lib/py4j-0.10.4-src.zip

${SPARK_HOME}/python/pyspark/shell.py

With that, Pyspark should be properly set up within PyDev.

(f) Run Pyspark from Eclipse

Right-click network_wordcount.py > Run As > Python Run

(You can also open Run Configurations > Arguments to supply program arguments, e.g. "localhost 9999".)