With the widespread adoption of large Machine Learning (ML) models, there is a real need to understand how these models work. Otherwise a model appears to be a black box, and the end user does not know the whys and hows behind its responses, choices, and decisions. Looking inside the model (the white-box approach) is possible, but it is not practical for the vast majority of users.

Local Interpretable Model-Agnostic Explanations (LIME) and SHapley Additive exPlanations (SHAP) are two black-box techniques that help explain the workings of such models. The key idea behind both is:

- Generate (synthetic) input data from actual data, with some of the features (such as income, age, etc.) altered at random.

- Run the model on the generated inputs and use the outputs to understand the effect of the altered features (individually or in combination), and thereby the importance or relevance of each feature to the model's output.

- For example, in a loan approval/rejection scenario, by altering two features in the input (income level and gender) and testing, one might discover that income level has an effect on the decision but gender does not.
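
The loan scenario can be sketched in a few lines of Python. This is only an illustration of the perturb-and-observe idea, not how LIME or SHAP are implemented: `toy_loan_model`, `flip_rate`, the income threshold, and the candidate values are all hypothetical names and numbers chosen for the example.

```python
import random


def toy_loan_model(applicant):
    """Hypothetical black box: approves (1) on income alone and ignores gender."""
    return 1 if applicant["income"] >= 50_000 else 0


def flip_rate(model, applicant, feature, candidates, trials=200, seed=0):
    """Fraction of random alterations of one feature that change the decision."""
    rng = random.Random(seed)
    baseline = model(applicant)
    flips = 0
    for _ in range(trials):
        altered = dict(applicant)                  # copy the real input
        altered[feature] = rng.choice(candidates)  # alter one feature at random
        if model(altered) != baseline:
            flips += 1
    return flips / trials


applicant = {"income": 62_000, "gender": "F"}
income_effect = flip_rate(
    toy_loan_model, applicant, "income", [20_000, 40_000, 60_000, 80_000]
)
gender_effect = flip_rate(toy_loan_model, applicant, "gender", ["F", "M"])

print("income effect:", income_effect)
print("gender effect:", gender_effect)
```

Because the toy model never reads the gender field, altering it cannot change the decision, while altering income sometimes does.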

With that background, let's look at SHAP for language models that take text as input. Here the features are the words (tokens) that make up the input string.

For an input like: "Glad to see you",

The features are: "Glad", "to", "see", "you"

SHAP explains the impact of each word (token) on the model's output by passing in altered versions of the input with some words MASKED:

"* to see you", "Glad to * you", ...

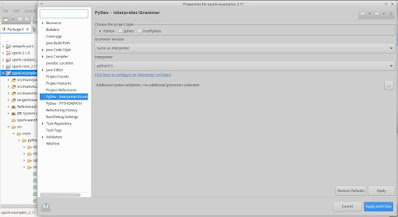

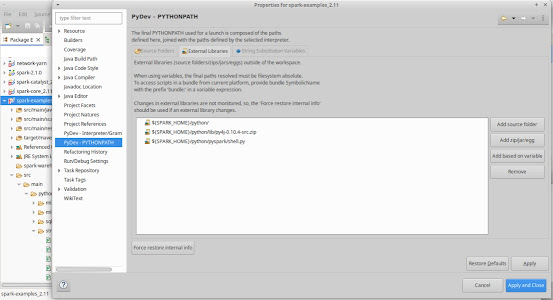
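
To make the masking idea for "Glad to see you" concrete, here is a minimal sketch that computes exact Shapley values for each token by brute force, scoring every masked variant of the input. It makes several assumptions: `toy_score` is a hypothetical stand-in for a real model, and its weights are made up. The shap library itself relies on approximations rather than enumerating every subset, so this shows the underlying idea only.

```python
from itertools import combinations
from math import factorial

MASK = "*"


def toy_score(tokens):
    """Hypothetical scorer standing in for a real model (made-up weights)."""
    weights = {"Glad": 0.3, "see": 0.1}
    score = 0.5 + sum(weights.get(t, 0.0) for t in tokens)
    if "Glad" in tokens and "you" in tokens:
        score += 0.05  # small interaction between two words
    return score


def masked(tokens, keep):
    """Replace every token whose index is not in `keep` with the mask."""
    return [t if i in keep else MASK for i, t in enumerate(tokens)]


def shapley_values(tokens, score):
    """Exact Shapley value per token, enumerating all subsets of the others."""
    n = len(tokens)
    values = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                keep = set(subset)
                with_i = score(masked(tokens, keep | {i}))
                without_i = score(masked(tokens, keep))
                phi += weight * (with_i - without_i)
        values.append(phi)
    return values


tokens = ["Glad", "to", "see", "you"]
base_value = toy_score(masked(tokens, set()))  # everything masked
full_value = toy_score(tokens)                 # nothing masked
values = shapley_values(tokens, toy_score)

for token, value in zip(tokens, values):
    print(f"{token:>5}: {value:+.3f}")
```

The per-token values add up to the difference between the unmasked score and the fully masked score, which is the additive property that gives SHAP its name.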

TextClassificationTorchShap.py shows how SHAP works with the Text Classification Model trained on the IMDb dataset. The code requires shap to be installed:

pip3 install shap

The script first loads the pre-trained Text Classification model and its vocabulary. It then plugs into the shap library through a custom tokenizer that generates token_ids and offsets for the given input data.

masker = maskers.Text(custom_tokenizer, mask_token=SPECIAL_TOKEN_UNK)

explainer = shap.Explainer(predict,masker=masker)
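
The custom tokenizer passed to the masker is not shown above. As a rough sketch of what such a function might look like, the version below splits on whitespace and returns a dictionary with "input_ids" and "offset_mapping" keys, which to my understanding is the shape shap's text masker expects from a callable tokenizer. The vocabulary `VOCAB` and the whitespace splitting are assumptions made for illustration; the actual script uses its own vocabulary and may tokenize differently.

```python
import re

# Hypothetical toy vocabulary; the real script loads its own.
VOCAB = {"<unk>": 0, "glad": 1, "to": 2, "see": 3, "you": 4}


def custom_tokenizer(text, return_offsets_mapping=True):
    """Whitespace tokenizer returning token ids and character offsets."""
    input_ids = []
    offsets = []
    for match in re.finditer(r"\S+", text):
        token = match.group().lower()
        input_ids.append(VOCAB.get(token, VOCAB["<unk>"]))  # unknown words map to <unk>
        offsets.append(match.span())                        # (start, end) in the original string
    out = {"input_ids": input_ids}
    if return_offsets_mapping:
        out["offset_mapping"] = offsets
    return out


encoded = custom_tokenizer("Glad to see you")
print(encoded["input_ids"])
print(encoded["offset_mapping"])
```

The offsets let the masker map each token back to its span in the original string, so it can replace individual words with the mask token.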

Finally, shap is called with some sample input text. It runs the model on versions of the text with different words masked and collects the outputs, which can be used to generate a visual report of the impact of the different words, as seen in the figure.

The model classifies any given input text as either POSITIVE (score near 1) or NEGATIVE (score near 0). The figure shows the output for two inputs: "This is a great one to watch." and "What a long drawn boring affair to the end credits."

Let's look first at "This is a great one to watch.":

- The base value is 0.539161, which is the model's output for a completely MASKED input, i.e. "* * * * * * *".

- The words "to w.." and "This is" move the score up to 0.7.

- The words "a great" then move the score up further to 0.996787, the actual output of the model for the complete input text "This is a great one to watch."

- The model rightly classifies this as POSITIVE, with a score of 0.996787 (close to 1).

Similarly, for the text "What a long drawn boring affair to the end credits.":

- The completely masked base value is again 0.539161.

- The key words in this case are "boring affair to the".

- The text is rightly classified as NEGATIVE with a score of 0.0280297 (close to 0).
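
The numbers reported for the two inputs can be tied together with a little arithmetic: the word contributions must sum to the difference between the model's output and the base value. The snippet below works this out from the figures quoted above. The 0.5 decision threshold in `label` is an assumption for illustration; the text only says scores near 1 are POSITIVE and scores near 0 are NEGATIVE.

```python
BASE_VALUE = 0.539161  # model output for a completely masked input

# Model outputs reported above for the two sample inputs.
outputs = {
    "This is a great one to watch.": 0.996787,
    "What a long drawn boring affair to the end credits.": 0.0280297,
}


def total_contribution(output, base=BASE_VALUE):
    """Sum of all word contributions implied by the base value and the output."""
    return output - base


def label(score, threshold=0.5):
    """Assumed decision rule: the 0.5 threshold is not stated in the text."""
    return "POSITIVE" if score >= threshold else "NEGATIVE"


totals = {text: total_contribution(score) for text, score in outputs.items()}

for text, score in outputs.items():
    print(f"{label(score):8s} total word contribution {totals[text]:+.6f}  {text}")
```

A positive total means the words, taken together, pushed the score above the base value, and a negative total means they pulled it below.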