MLflow is a leading open-source framework for managing AI/ML workflows. It supports tracking, monitoring, and visualizing the end-to-end ML project lifecycle, which makes it a handy ops-side tool that improves the interpretability of AI/ML projects.

Key MLflow concepts are intuitively named: ML Projects contain Models, on which Runs of Experiments are carried out, and these in turn get Tagged with meaningful, human-readable labels, and so on.

While MLflow is a Python-native library with integrations for the leading Python AI/ML frameworks such as OpenAI, LangChain, LlamaIndex, etc., there are also MLflow REST API endpoints for wider portability.
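As a rough illustration of the REST endpoints mentioned above, the sketch below builds (without sending) a request that creates an experiment. It assumes Java 11+ for java.net.http, a tracking server at http://127.0.0.1:8080, and the documented POST /api/2.0/mlflow/experiments/create endpoint. The class and method names are made up for this example, and the JSON escaping is deliberately minimal.

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Sketch only: builds, but does not send, a request to the MLflow REST API.
class CreateExperimentRequest {

    // Minimal JSON string quoting: escapes backslashes and double quotes only.
    static String jsonString(String s) {
        return "\"" + s.replace("\\", "\\\\").replace("\"", "\\\"") + "\"";
    }

    // JSON body for POST /api/2.0/mlflow/experiments/create.
    static String body(String experimentName) {
        return "{\"name\": " + jsonString(experimentName) + "}";
    }

    // Assembles the POST request against the given tracking server.
    static HttpRequest build(String trackingUri, String experimentName) {
        return HttpRequest.newBuilder()
                .uri(URI.create(trackingUri + "/api/2.0/mlflow/experiments/create"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(body(experimentName)))
                .build();
    }

    public static void main(String[] args) {
        // Server address and experiment name are illustrative assumptions.
        HttpRequest request = build("http://127.0.0.1:8080", "demo-experiment");
        System.out.println(request.method() + " " + request.uri());
        System.out.println(body("demo-experiment"));
    }
}
```

To actually send it, the request could be handed to HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString()) once a server is running.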

There's also an MLflow Java API for use from the Java ecosystem. The corresponding MLflow Java client (Maven artifact, etc.) works well with the API. To get started with MLflow using Java:

(I) Install mlflow (Getting started guide)

$ pip install mlflow

This installs mlflow into the user's .local folder:

~/.local/bin/mlflow

(II) Start Local mlflow server (simple without authentication)

$ mlflow server --host 127.0.0.1 --port 8080

The mlflow server should now be running at

http://127.0.0.1:8080
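A quick way to confirm from Java that the server is reachable is to probe its health endpoint. The sketch below assumes the server exposes /health and answers with HTTP 200 when up, and that Java 11+ is available. The class name and the two-second timeouts are arbitrary choices for illustration.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

// Sketch only: probes an MLflow tracking server's health endpoint.
class MlflowHealthCheck {

    // URI of the health endpoint (assumed to be "/health"), tolerating a trailing slash.
    static URI healthUri(String trackingUri) {
        String base = trackingUri.endsWith("/")
                ? trackingUri.substring(0, trackingUri.length() - 1)
                : trackingUri;
        return URI.create(base + "/health");
    }

    // Returns true only if the server answers the health request with HTTP 200.
    static boolean isUp(String trackingUri) {
        HttpClient http = HttpClient.newBuilder()
                .connectTimeout(Duration.ofSeconds(2))
                .build();
        HttpRequest request = HttpRequest.newBuilder(healthUri(trackingUri))
                .timeout(Duration.ofSeconds(2))
                .GET()
                .build();
        try {
            HttpResponse<Void> response =
                    http.send(request, HttpResponse.BodyHandlers.discarding());
            return response.statusCode() == 200;
        } catch (IOException e) {
            return false; // connection refused, timeout, etc.
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    public static void main(String[] args) {
        String uri = "http://127.0.0.1:8080";
        System.out.println(uri + " up? " + isUp(uri));
    }
}
```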

(III) Download mlflower repo (sample Java client code)

Next, clone the mlflower repo, which has sample code showing the mlflow Java client at work.

- The class Mlfclient shows a simple use case of Creating an Experiment:

client.createExperiment(experimentName);

This is followed by a few runs that log some Parameters, Metrics, and Artifacts:

run.logParam();

run.logMetric();

run.logArtifact();

- Run Hierarchy: The class NestedMlfClient shows the nesting hierarchy of MLflow runs:

Parent Run -> Child Run -> Grandchild Run -> ... and so on
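Under the hood, a child run is linked to its parent through the mlflow.parentRunId tag. The sketch below is not the mlflower code. It only shows, using plain Java, what the REST bodies for POST /api/2.0/mlflow/runs/create could look like at each level of the hierarchy. The class name, the placeholder run ids, and the use of the default experiment id "0" are assumptions for illustration.

```java
// Sketch only: JSON bodies for creating a parent, child and grandchild run.
class NestedRunBodies {

    // Minimal JSON string quoting: escapes backslashes and double quotes only.
    static String quote(String s) {
        return "\"" + s.replace("\\", "\\\\").replace("\"", "\\\"") + "\"";
    }

    // Body for POST /api/2.0/mlflow/runs/create.
    // parentRunId may be null, which yields a top-level run without a parent tag.
    static String createRunBody(String experimentId, String runName,
                                String parentRunId, long startTimeMillis) {
        StringBuilder json = new StringBuilder();
        json.append("{\"experiment_id\": ").append(quote(experimentId));
        json.append(", \"run_name\": ").append(quote(runName));
        json.append(", \"start_time\": ").append(startTimeMillis);
        if (parentRunId != null) {
            // The parent link is carried by a tag on the child run.
            json.append(", \"tags\": [{\"key\": \"mlflow.parentRunId\", \"value\": ")
                .append(quote(parentRunId)).append("}]");
        }
        return json.append("}").toString();
    }

    public static void main(String[] args) {
        long now = System.currentTimeMillis();
        // Run ids below are placeholders; real ids come back in each create response.
        System.out.println(createRunBody("0", "parent", null, now));
        System.out.println(createRunBody("0", "child", "PARENT_RUN_ID", now));
        System.out.println(createRunBody("0", "grandchild", "CHILD_RUN_ID", now));
    }
}
```

With the Java client, the same link is presumably set for you by MlflowContext.startRun(runName, parentRunId), passing the parent's run id each time you go one level deeper.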

(IV) Start Local mlflow server (with Basic Authentication)

While authentication is crucial for managing workflows, MLflow provided only Basic Auth until very recently. Version 3.5 onwards has better support for various auth providers, SSO, etc. For now, only the MLflow Basic Auth integration is shown.

# Start server with Basic Auth

mlflow server --host 127.0.0.1 --port 8080 --app-name basic-auth

As before, the mlflow server should start running at

http://127.0.0.1:8080

This time, however, login credentials are required to access the page. The default admin credentials are mentioned on mlflow basic-auth-http.

- The class BasicAuthMlfclient shows the Java client using BasicMlflowHostCreds to connect to MLflow with basic auth:

new MlflowClient(new BasicMlflowHostCreds(TRACKING_URI, USERNAME, PASSWORD));
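For context, HTTP Basic auth boils down to sending an Authorization header containing the base64-encoded username:password pair. The sketch below shows that encoding with the JDK alone, which can be handy when calling the REST endpoints directly or when checking what goes over the wire. The class name and the credentials are placeholders, not MLflow defaults.

```java
import java.nio.charset.StandardCharsets;
import java.util.Base64;

// Sketch only: computes the HTTP Basic auth header value for a username/password pair.
class BasicAuthHeader {

    // Returns "Basic " followed by base64("username:password").
    static String value(String username, String password) {
        String pair = username + ":" + password;
        return "Basic " + Base64.getEncoder()
                .encodeToString(pair.getBytes(StandardCharsets.UTF_8));
    }

    public static void main(String[] args) {
        // Placeholder credentials for illustration only.
        System.out.println("Authorization: " + value("user", "pass"));
    }
}
```

Since base64 is an encoding and not encryption, Basic auth over plain http on anything other than localhost exposes the credentials.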

(V) Deletes: Soft/Hard

- Experiments, Runs, etc. created within mlflow can be deleted from the UI (and the client). These deletes are only soft, however: the deleted items are kept in a recycle bin of sorts that is not visible in the UI.

- Hard/permanent deletes can be carried out from the mlflow CLI:

# Set mlflow server tracking uri

export MLFLOW_TRACKING_URI=http://127.0.0.1:8080

# Clear garbage

mlflow gc

(VI) Issues

- MlflowContext.withActiveRun() absorbs exceptions without logging anything, and simply sets the run status to RunStatus.FAILED.

- To debug runs shown as failed in the mlflow UI, it's best to put an explicit try-catch in the client code to find the cause.
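One way to add that try-catch without repeating it in every run body is a small wrapper around the Consumer that withActiveRun() accepts. The sketch below uses only the JDK. The class and method names are invented for this example, and a String stands in for the ActiveRun in the demo.

```java
import java.util.function.Consumer;

// Sketch only: makes exceptions thrown inside a run body visible before they propagate.
class LoggedRunBody {

    // Wraps a run body so that any runtime exception is printed and then rethrown.
    static <T> Consumer<T> logged(Consumer<T> body) {
        return run -> {
            try {
                body.accept(run);
            } catch (RuntimeException e) {
                System.err.println("Run body failed: " + e);
                e.printStackTrace();
                throw e; // rethrow so the caller can still mark the run as failed
            }
        };
    }

    public static void main(String[] args) {
        // A String stands in for the ActiveRun in this standalone demo.
        Consumer<String> body = logged(run -> {
            throw new IllegalStateException("boom in " + run);
        });
        try {
            body.accept("demo-run");
        } catch (IllegalStateException e) {
            System.out.println("caller still sees: " + e.getMessage());
        }
    }
}
```

Assuming the client's withActiveRun(String, Consumer<ActiveRun>) overload, the wrapper could be passed as context.withActiveRun("my-run", LoggedRunBody.logged(run -> { ... })), so the stack trace is printed before the run is marked as failed.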

- Artifacts cannot be uploaded, since the CLI looks for python (and not python3) on the PATH to run.

- Error message: Failed to exec 'python -m mlflow.store.artifact.cli', needed to access artifacts within the non-Java-native artifact store at 'mlflow-artifacts:

- The dev box (Ubuntu 20.04) has python3 (and not python) installed.

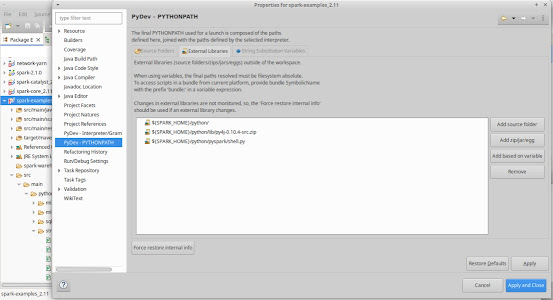

- Without changing the dev box, a simple fix is to set/export the environment variable MLFLOW_PYTHON_EXECUTABLE (within the IDE, shell, etc.) to whichever python executable is installed on the box:

MLFLOW_PYTHON_EXECUTABLE=/usr/bin/python3
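Because the variable has to be visible to the JVM (an export in one shell does not reach an IDE launched elsewhere), a small preflight check at client startup can save some head-scratching. The sketch below assumes the client falls back to plain python when MLFLOW_PYTHON_EXECUTABLE is unset, as the error message above suggests. The class name is made up for illustration, and the code needs Java 11+.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Map;

// Sketch only: reports which Python command the artifact CLI would likely be launched with.
class PythonExecutableCheck {

    // Uses MLFLOW_PYTHON_EXECUTABLE if present, otherwise the assumed default "python".
    static String resolve(Map<String, String> env) {
        String configured = env.get("MLFLOW_PYTHON_EXECUTABLE");
        return configured == null ? "python" : configured;
    }

    public static void main(String[] args) {
        String python = resolve(System.getenv());
        System.out.println("Artifact CLI would be launched with: " + python);
        // Only absolute paths can be checked directly; bare names depend on PATH lookup.
        Path path = Path.of(python);
        if (path.isAbsolute() && !Files.isExecutable(path)) {
            System.err.println("Warning: " + python + " is not an executable file");
        }
    }
}
```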

So with that, keep the AI/ML projects flowing!