Continuing from the earlier post on debugging PySpark, here we show how to debug the Spark components written in Scala/Java. Spark is a distributed processing engine written in Scala that runs on the JVM and exposes APIs for other languages such as Python and Java (PySpark drives the JVM side through Py4J). The high-level PySpark architecture is shown here.

To debug the Spark Scala/Java components, which run inside the JVM, it is easiest to use the JVM's standard remote-debugging support (the JDWP agent, enabled through Java tool options such as JAVA_TOOL_OPTIONS) from any compatible IDE, such as IntelliJ IDEA (the Scala IDE plugin for Eclipse is no longer maintained). A few points to remember:

- Multiple JVMs in Spark: Spark is a distributed application made up of several components: the Master, the Driver, the Slave/Worker and the Executors. In a truly distributed, real-world setting, each component runs in its own JVM, often on separate physical machines. So be clear about which component you want to debug, and set the JVM options on that specific JVM instance.

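- Connectivity between IDE & JVM: the debugger (IDE) and the running JVM talk over a TCP socket, so the network must allow the connection between them. In the listen mode used here (server=n), the JVM opens the connection, so the IDE's debug port must be reachable from the machine running the JVM.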

- Debugging locally: Debugging is mostly a development-stage activity done locally, so it is usually simpler to debug against a Spark cluster running on your own machine: either a Spark Standalone cluster or Spark in local mode (master=local[n] / local[*]).
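To make the "target a specific JVM" point concrete, here is a minimal Python sketch that builds the JDWP agent string used in this post and maps each Spark component to where its JVM options are usually supplied. The helper names (jdwp_agent_option, DEBUG_TARGETS, debug_setting) and the per-component ports are hypothetical; the property names are Spark's, while the environment-variable route for the Master and Worker daemons is an assumption worth checking against the spark-env.sh template of your Spark version.

```python
# Hypothetical helper: builds the JDWP agent option used throughout this post.
def jdwp_agent_option(port, host="localhost", server=False, suspend=False):
    """Return a -agentlib:jdwp option string for one JVM.

    server=False: the JVM connects out to a debugger listening on host:port
                  (IDEA's "Listen to remote JVM" mode).
    server=True:  the JVM listens on host:port and the IDE attaches to it.
    suspend=True: the JVM is suspended at startup until the debugger lets it run.
    """
    def yn(flag):
        return "y" if flag else "n"

    return ("-agentlib:jdwp=transport=dt_socket,server={},address={}:{},suspend={}"
            .format(yn(server), host, port, yn(suspend)))


# Where each component's JVM options are usually supplied, plus a hypothetical
# debug port per component so several JVMs can be debugged side by side,
# each with its own IDEA Remote JVM Debug configuration.
DEBUG_TARGETS = {
    # Assumption: these env vars end up on the daemon's java command line.
    "master": ("SPARK_MASTER_OPTS in conf/spark-env.sh", 5001),
    "worker": ("SPARK_WORKER_OPTS in conf/spark-env.sh", 5002),
    # Spark's docs: in client mode set driver options at launch time
    # (spark-defaults.conf or --driver-java-options), not via SparkConf.
    "driver": ("spark.driver.extraJavaOptions", 5003),
    "executor": ("spark.executor.extraJavaOptions", 5005),
}


def debug_setting(component):
    """Return (where the option goes, JDWP option string) for a component."""
    location, port = DEBUG_TARGETS[component]
    return location, jdwp_agent_option(port)
```

For example, debug_setting("executor") yields the same option string that goes into spark-defaults.conf in step (II)(a); using a distinct port per component avoids two JVMs competing for one listening IDEA configuration.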

Steps:

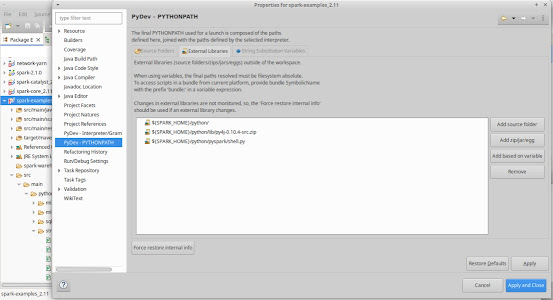

Environment: Ubuntu 20.04, Java 8, Spark/PySpark 2.1.0, Python 3.5, IntelliJ IDEA 2024.3, Maven 3.6

(I) Idea Remote JVM Debugger

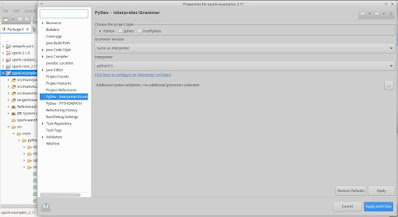

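In IDEA, open Run > Edit Configurations, click + and add a Remote JVM Debug configuration:

- Set the debugger mode to "Listen to remote JVM", with host localhost and port 5005 (the port used in the JVM options below), then start this configuration so IDEA waits for the JVM to connect

- Enable Auto restart, so the debugger goes back to listening after a JVM disconnects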
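Before launching Spark, it can help to confirm that the IDEA configuration is really listening on port 5005. The following is a minimal sketch for the local setup recommended here (IDE and Spark on the same Linux box, IDEA listening on localhost or all interfaces); port_in_use is a hypothetical helper. It tries to bind the port rather than connect to it, on the assumption that a stray TCP connection could be taken by the debugger as a (failed) JVM attach.

```python
import errno
import socket


def port_in_use(port, host="127.0.0.1"):
    """Return True if some process is already listening on host:port.

    Local check only. Binding instead of connecting means no TCP connection
    ever reaches the debugger.
    """
    probe = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        probe.bind((host, port))
    except OSError as exc:
        if exc.errno == errno.EADDRINUSE:
            return True  # bind refused: something (e.g. IDEA) holds the port
        raise  # other errors (permissions, bad address) are real problems
    finally:
        probe.close()
    return False  # bind succeeded, so nothing was listening there
```

For example, if port_in_use(5005) returns False, the Remote JVM Debug configuration has not been started yet, and a JVM launched with server=n would fail to connect.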

(II)(a) Debug Spark Standalone cluster

Key features of the Spark Standalone cluster are:

- Separate JVMs for Master, Slave/ Worker, Executor

- All of them can run on a single dev box, provided enough resources (memory, CPU) are available

- Scripts in the SPARK_HOME/sbin folder, such as start-master.sh and start-slave.sh (renamed start-worker.sh in newer Spark releases), are used to start these Spark services

To debug, say, an Executor, start a Spark Standalone cluster with 1 Master, 1 Worker and 1 Executor (the Worker launches the Executor once the application connects).

```shell
# Run from ${SPARK_HOME}
# Start the Master (check http://localhost:8080/ for the Master URL and port)
./sbin/start-master.sh

# Start a Slave/Worker and register it with the Master
./sbin/start-slave.sh spark://<MASTER_HOST>:<MASTER_PORT>
```

Then add the JDWP agent to the Executor's JVM options in ${SPARK_HOME}/conf/spark-defaults.conf:

```
spark.executor.extraJavaOptions -agentlib:jdwp=transport=dt_socket,server=n,address=localhost:5005,suspend=n
```
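If you prefer to script that change, here is a minimal Python sketch that sets one key in the text of spark-defaults.conf, replacing an active entry for the same key or appending a new line. set_spark_default is a hypothetical helper, and it assumes the whitespace-separated "key value" layout of Spark's template (Java properties syntax also allows key=value, which this sketch does not recognise).

```python
def set_spark_default(conf_text, key, value):
    """Return conf_text with `key value` set, replacing any active entry for key.

    Lines starting with '#' (comments) and blank lines are left untouched.
    """
    new_line = "{} {}".format(key, value)
    lines = conf_text.splitlines()
    for i, line in enumerate(lines):
        stripped = line.strip()
        if not stripped or stripped.startswith("#"):
            continue
        if stripped.split(None, 1)[0] == key:
            lines[i] = new_line  # replace the existing setting in place
            return "\n".join(lines) + "\n"
    lines.append(new_line)  # key not set yet: append it
    return "\n".join(lines) + "\n"
```

In practice, read ${SPARK_HOME}/conf/spark-defaults.conf (copied from conf/spark-defaults.conf.template if it does not exist yet), pass its text through the function with the executor option, and write the result back before starting the application.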

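The value could instead be passed as a conf to the SparkContext in the Python script:

```python
from pyspark import SparkContext
from pyspark.conf import SparkConf

confVals = SparkConf()
# server=n: the Executor JVM connects to the IDEA debugger listening on localhost:5005
# suspend=y: the Executor JVM is suspended at startup until the debugger lets it run,
# so breakpoints are in place before any Executor code executes
confVals.set("spark.executor.extraJavaOptions",
             "-agentlib:jdwp=transport=dt_socket,server=n,address=localhost:5005,suspend=y")

sc = SparkContext(master="spark://localhost:7077",
                  appName="PythonStreamingStatefulNetworkWordCount1",
                  conf=confVals)
```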
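When an Executor sits at a breakpoint, IDEA's default "Suspend: All" policy also stops the Executor's heartbeat thread, and the Driver may eventually drop the Executor as lost. A minimal sketch, assuming that raising spark.network.timeout (default 120s) is enough to ride out long pauses, returns conf pairs suitable for SparkConf.setAll(); executor_debug_conf and the 600s value are hypothetical choices, not settings from the original setup.

```python
def executor_debug_conf(port=5005, suspend=True, network_timeout="600s"):
    """Return (key, value) pairs for SparkConf.setAll() when debugging an Executor.

    The longer spark.network.timeout is an assumption meant to keep the
    Driver from declaring a paused Executor lost.
    """
    agent = ("-agentlib:jdwp=transport=dt_socket,server=n,"
             "address=localhost:{},suspend={}".format(port, "y" if suspend else "n"))
    return [
        ("spark.executor.extraJavaOptions", agent),
        ("spark.network.timeout", network_timeout),
    ]
```

For example, conf = SparkConf().setAll(executor_debug_conf()) can stand in for the confVals set-up above. Switching a breakpoint's Suspend policy to "Thread" in IDEA is another way to keep the Executor's other threads running.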

(II)(b) Debug locally with master="local[n]"

- In this case, Spark runs in local mode, started through the scripts in the bin/ folder such as spark-shell or spark-submit

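- The Driver and the Executor run inside a single JVM, with tasks executed on threads, where n is the number of worker threads. Set n=2 here: the streaming example's receiver occupies one thread, so at least one more is needed to process the received data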

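- Before running the PySpark script, export JAVA_TOOL_OPTIONS in the same terminal. Every JVM started from that terminal afterwards picks these options up, including the local Spark JVM:

```shell
export JAVA_TOOL_OPTIONS="-agentlib:jdwp=transport=dt_socket,server=n,suspend=n,address=5005"
```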
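Exporting JAVA_TOOL_OPTIONS in the shell affects every JVM launched from it afterwards. As an alternative, here is a minimal Python sketch that sets the option for one child process only. The helper names are hypothetical, and it assumes pyspark is importable by the interpreter running it, as in the setup above.

```python
import os
import subprocess
import sys


def env_with_java_tool_options(agent_option, base_env=None):
    """Return a copy of the environment with agent_option added to JAVA_TOOL_OPTIONS.

    Existing JAVA_TOOL_OPTIONS are kept; the base environment is not modified.
    Every JVM started with the returned environment loads the option.
    """
    env = dict(os.environ if base_env is None else base_env)
    existing = env.get("JAVA_TOOL_OPTIONS", "").strip()
    env["JAVA_TOOL_OPTIONS"] = (existing + " " + agent_option).strip()
    return env


def run_with_debugger(script_args, port=5005):
    """Run a PySpark script with the JDWP agent set only for that child process."""
    agent = ("-agentlib:jdwp=transport=dt_socket,server=n,suspend=n,"
             "address={}".format(port))
    return subprocess.run([sys.executable] + list(script_args),
                          env=env_with_java_tool_options(agent))
```

For example, run_with_debugger([os.path.join(os.environ["SPARK_HOME"], "examples/src/main/python/streaming/network_wordcount.py"), "localhost", "9999"]) runs the example from step (III) with the debugger option applied to that run alone.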

(III) Execute PySpark Python script

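The network_wordcount.py example reads lines from a socket on localhost:9999, so first start a data server there (for example with netcat) in a separate terminal, then run the script:

```shell
# Terminal 1: data server the example connects to; type words here
nc -lk 9999

# Terminal 2: run the example (for local[n], the terminal where JAVA_TOOL_OPTIONS was exported)
python3.5 ${SPARK_HOME}/examples/src/main/python/streaming/network_wordcount.py localhost 9999
```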

This should start PySpark and connect the Spark JVM to the waiting IDEA remote debugger: the Executor JVM in the Standalone setup of (II)(a), or the single local JVM in (II)(b).
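If netcat is not available, the data server the example expects on localhost:9999 can be replaced by a few lines of Python. This is a minimal sketch: serve_lines is a hypothetical helper, it accepts a single connection (unlike nc -lk, which keeps listening), and it sends a fixed list of lines with a pause between them, which leaves time to step through breakpoints.

```python
import socket
import time


def serve_lines(lines, port=9999, host="localhost", delay=1.0):
    """Accept one client and send it each line, pausing `delay` seconds between lines."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        # Allow quick restarts on the same port between debugging sessions.
        server.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
        server.bind((host, port))
        server.listen(1)
        conn, _ = server.accept()  # blocks until the Spark receiver connects
        with conn:
            for line in lines:
                conn.sendall((line + "\n").encode("utf-8"))
                time.sleep(delay)
```

For example, serve_lines(["hello world", "hello spark"] * 10) started before the PySpark script feeds the receiver twenty lines, one per second; the connection closes after the last line.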